Ethics, Compliance and Audit Services

University of California Presidential Working Group on Artificial Intelligence Standing Council (AI Council)

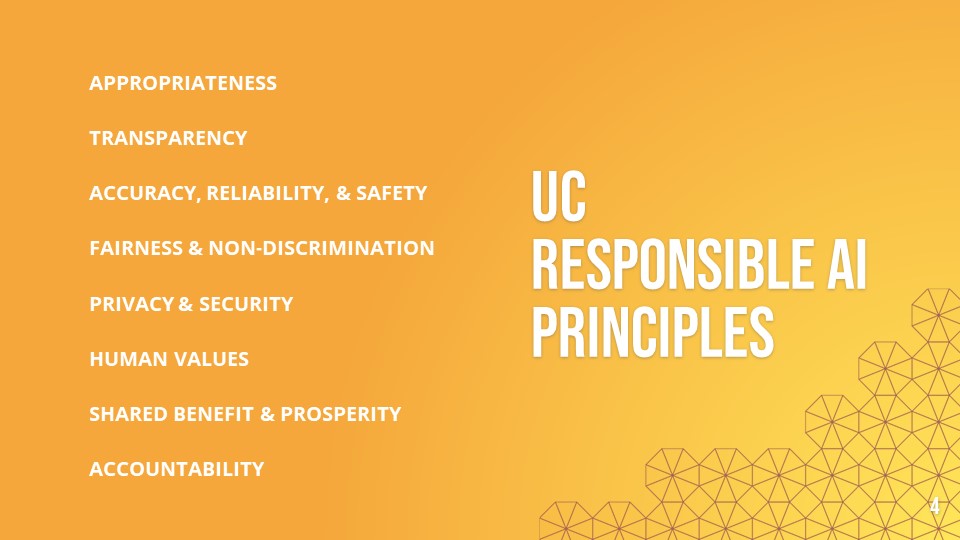

On October 6, 2021, President Michael V. Drake adopted the Presidential Working Group on Artificial Intelligence’s Responsible AI Principles and related recommendations to guide UC’s development and use of AI in its operations. The recommendations seek to:

- Institutionalize the UC Responsible AI Principles in procurement, development, implementation, and monitoring of AI-enabled technologies deployed in UC services;

- Establish campus-level councils and support coordination across UC that will further the principles and guidance developed by the Working Group;

- Develop an AI risk and impact assessment strategy; and

- Document AI-enabled technologies in a public database.

In May 2022, President Drake established the UC Presidential Working Group on Artificial Intelligence Standing Council (AI Council) to assist in the implementation of the UC Responsible AI Principles.

Co-chairs

Alex Bui, PhD

Director, Medical & Imaging Informatics Group

Director, Medical Informatics Home Area

Professor, Departments of Radiological Sciences, Bioengineering & Bioinformatics

David Geffen Chair in Informatics

Alex Bui received his PhD in Computer Science in 2000, after which he joined the UCLA faculty. He is now the Director of the Medical & Imaging Informatics (MII) group. His research includes informatics and data science for biomedical research and healthcare in areas related to distributed information architectures and mHealth; methodological development, application, and evaluation of artificial intelligence (AI) methods, including machine/reinforcement learning; and data visualization. His work bridges contemporary computational approaches with the opportunities arising from the breadth of biomedical observations and the electronic health record (EHR), tackling the associated translational challenges. Dr. Bui has a long history of leading extramurally funded research, including from multiple National Institutes of Health (NIH) institutes (NCI, NLM, NINDS, NIBIB, etc.). He was Co-Director for the NIH Big Data to Knowledge (BD2K) Centers Coordination Center and Application Lead for the NSF-funded Expeditions in Computing Center for Domain-Specific Computing (CDSC), exploring cutting-edge hardware/software techniques for accelerating algorithms used in healthcare. He led the NIH-funded Los Angeles PRISMS Center, a U54 focused on mHealth informatics. He is now Director of UCLA’s Bridge2AI Coordination Center, a landmark NIH initiative to advance the use of AI/ML methods. Dr. Bui is Program Director of multiple NIH TL1/T15/T32s at UCLA in the areas of biomedical informatics and data science; Director for the Medical Informatics Home Area in the Graduate Program in Biosciences; Co-Director of the Center for SMART Health; and Senior Associate Director for Informatics for UCLA’s Clinical and Translational Science Institute (CTSI). He also co-chairs the University of California (UC) AI Council.

Alexander Bustamante

Senior Vice President

University of California, Office of the President

Alexander.Bustamante@ucop.edu

Alexander A. Bustamante is the Senior Vice President and Chief Compliance and Audit Officer for the University of California system. He leads the Office of Ethics, Compliance and Audit Services and oversees the University's corporate compliance, investigative, and audit programs. Most recently, Mr. Bustamante and his team have dedicated significant effort to current and emerging compliance issues related to research security and emergent technology (Foreign Influence | UCOP). His office also routinely conducts cyber-risk audits across the system to strengthen UC’s critical infrastructure, protect federally funded research, and safeguard UC’s large data sets used in operations and research, including machine learning and artificial intelligence (e.g., Center for Data-driven Insights and Innovations | UCOP). As co-chair of the UC Presidential Artificial Intelligence Council, he and his team created guidelines for the use of AI applications within the UC system (Artificial Intelligence | UCOP). Prior to coming to the University of California, Mr. Bustamante served as the Inspector General for the Los Angeles Police Department, where he was responsible for providing independent oversight of the Department. Mr. Bustamante also served as an Assistant United States Attorney for the Central District of California from 2002 to 2011, where he received the United States Attorney General's Award for Exceptional Service, the Department of Justice’s highest award, for handling a landmark case involving the federal government’s first use of civil rights statutes to combat racially motivated gang violence against African Americans. Mr. Bustamante received his Juris Doctor degree from the George Washington University Law School and his Bachelor’s degree in Rhetoric from the University of California, Berkeley.

Subcommittees

Innovation and Impact

The Innovation and Impact Subcommittee works to advance high-impact AI initiatives across UC campuses; promote collaboration, the sharing of best practices, and the development of an AI inventory; and align UC efforts with state, federal, and industry initiatives for the responsible and transformative use of AI.

Risk, Transparency, and Awareness

The AI Council Subcommittee on Risk, Transparency, and Awareness helps stakeholders across the University of California understand and assess the risks associated with the use of artificial intelligence, identify strategies to mitigate those risks, and connect to the appropriate resources to help address them. The Subcommittee fosters transparency within the University of California community, and to the public, regarding UC’s current and potential uses of AI. By enhancing transparency, UC aims to better assess potential risks and opportunities, evaluate experiences and outcomes, and inform future initiatives that ensure responsible AI use and promote efficiency, civil liberties, autonomy, and equitable outcomes. The Subcommittee also fosters a knowledgeable and engaged community that is well equipped to navigate, contribute to, and harness the evolving field of AI applications in higher education. It coordinates and promotes comprehensive engagement and awareness programs for the UC system by sharing up-to-date information, events, and best practices related to AI within the UC community.